This Post

Service Mesh - ASM and ISTIO

ASM is built on ISTIO. Just as GKE is a managed implementation of Kubernetes, ASM is essentially the managed version of ISTIO. I have seen people manage their own ISTIO, just like people manage their own Kubernetes control plane. I have done it in the past and I think there are very few reasons to want to manage our own, but it really depends on what is needed.

- If we need more control then maybe ISTIO is the right choice.

- If we just want to use it and not worry about scaling, securing, configuring, etc then ASM is the way to go.

Using ASM is much the same as using ISTIO, but without having to worry about ISTIO’s control plane and the other tasks needed to maintain ISTIO.

ASM in your GKE clusters

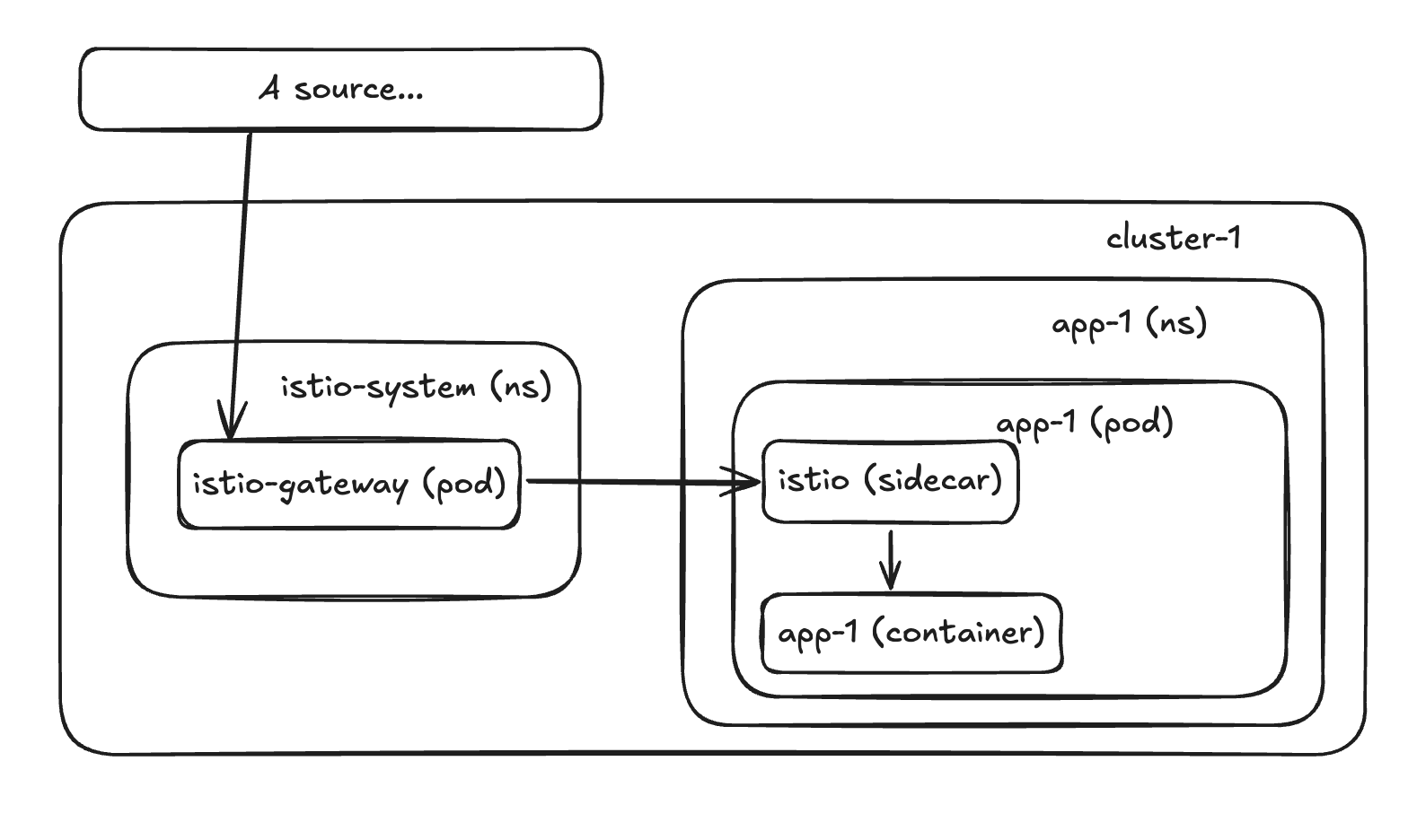

At a high level, this is what it looks like:

Where: istio (sidecar) == envoy (sidecar)

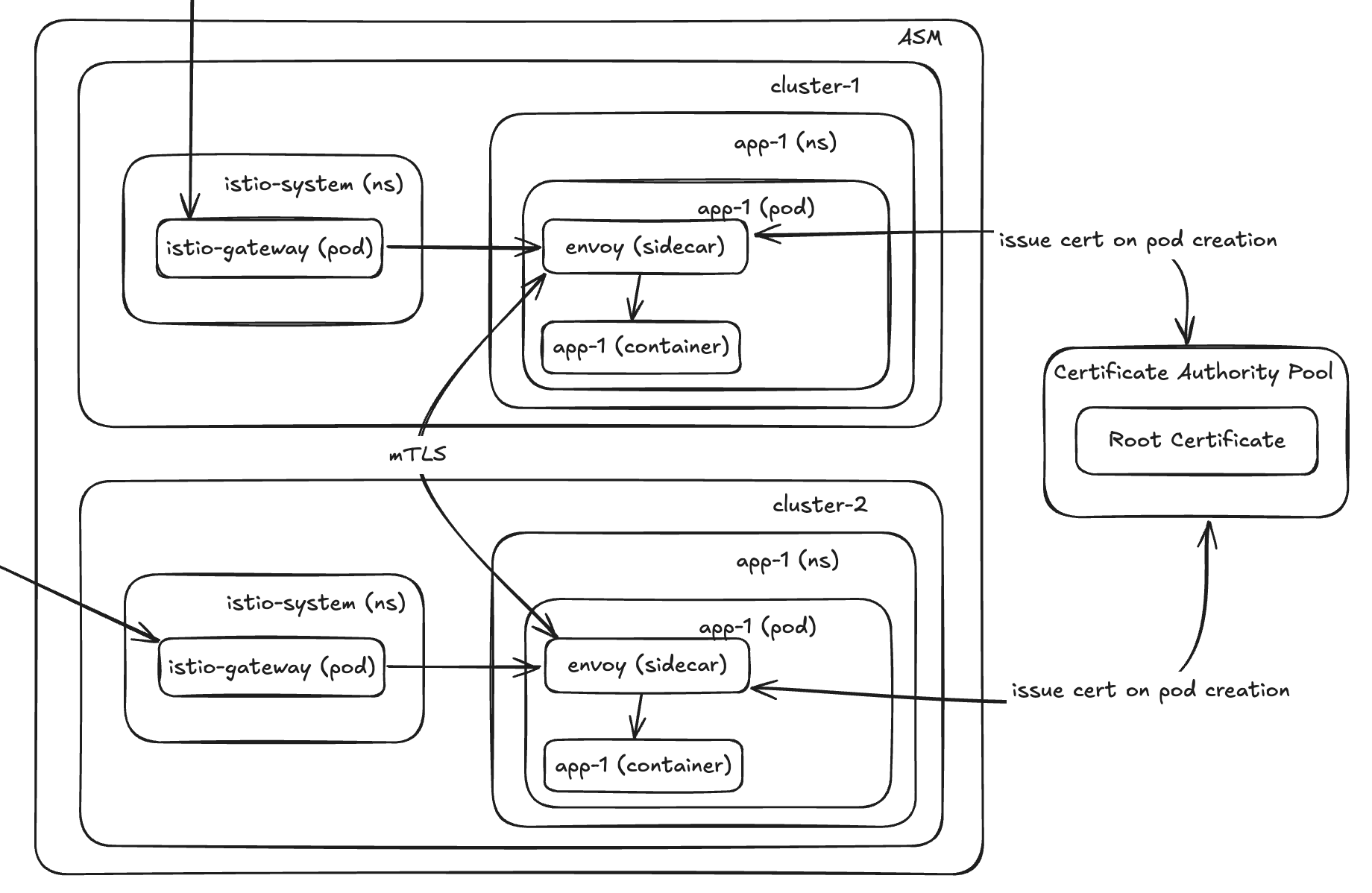

Let’s build on top of this! There are a few nice things about using ASM over ISTIO as it integrates well with other Google features. Two main things I like about it:

- Use Google CA Pool to manage your ASM certs for mTLS.

- Google logging and observability are already integrated.

Some key notes:

- The more traffic, the more istio-gateway pods are needed.

- You can have many different istio-gateways for different hosts and/or apps to limit the impact of a problem, but they all scale the same way.

- Routing from the istio-gateway only works when the Gateway (at the istio level) and the Network Policies, VirtualService and Service (the 3 at the app level) are all properly configured.

- If running hot/cold or hot/warm, DO NOT give VirtualServices the same name across clusters: ASM gets confused and will route some traffic to the cold/warm replica.
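The naming note above can be sketched in Python as a small manifest builder plus a pre-deploy check. This is only an illustration under assumptions: the app, cluster, host and gateway names are hypothetical, the Service is assumed to live in a namespace named after the app, and the naming convention (`<app>-<cluster>`) is just one way to keep VirtualService names unique per cluster.

```python
from collections import Counter


def virtual_service(app: str, cluster: str, host: str, gateway: str, port: int) -> dict:
    """Build a VirtualService manifest whose name embeds the cluster name."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        # Hypothetical convention: "<app>-<cluster>" keeps names unique per cluster.
        "metadata": {"name": f"{app}-{cluster}", "namespace": app},
        "spec": {
            "hosts": [host],
            # The Gateway this route binds to, as "<namespace>/<name>".
            "gateways": [gateway],
            "http": [
                {
                    "route": [
                        {
                            "destination": {
                                # Assumes a Service called <app> in namespace <app>.
                                "host": f"{app}.{app}.svc.cluster.local",
                                "port": {"number": port},
                            }
                        }
                    ]
                }
            ],
        },
    }


def names_shared_across_clusters(names_by_cluster: dict) -> set:
    """Return VirtualService names that appear in more than one cluster."""
    counts = Counter(
        name for names in names_by_cluster.values() for name in set(names)
    )
    return {name for name, seen in counts.items() if seen > 1}


hot = virtual_service("checkout", "hot", "checkout.example.com", "istio-gateway/public", 8080)
cold = virtual_service("checkout", "cold", "checkout.example.com", "istio-gateway/public", 8080)
clashes = names_shared_across_clusters(
    {"hot": [hot["metadata"]["name"]], "cold": [cold["metadata"]["name"]]}
)
```

A check like `names_shared_across_clusters` could run in CI before manifests are applied, failing the pipeline when `clashes` is not empty.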

For more ASM content go to: ASM tags

Receiving External Traffic…

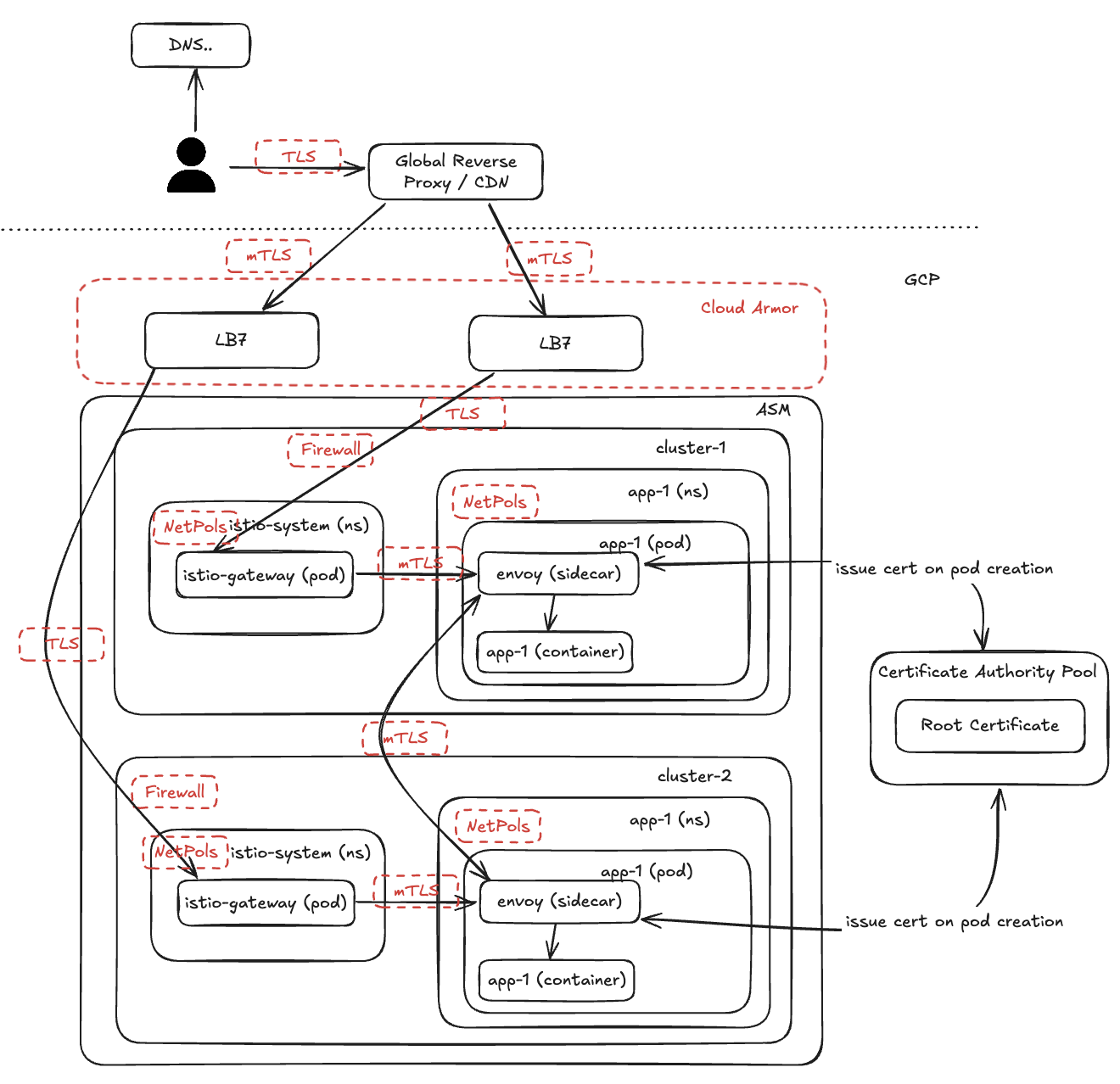

Exposing services to external users can be as simple as just opening up a port in your VM that has a public IP or it can go through different layers of protection. We will go through some layers in this section.

First, make sure traffic goes through a reverse proxy with DDoS, WAF, etc. protection, such as Cloudflare or Akamai.

Second, whatever sits behind that edge (an L7 LB, for example), make sure to:

- Only accept traffic from your global edge platform (allow list)

- Set up mTLS if possible

- Configure Cloud Armor WAF to only accept requests that carry a specific header sent by your global edge platform
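To make the allow list and header check concrete, here is a minimal Python sketch of the decision logic. In practice this lives in your LB and Cloud Armor rules, not in application code; the IP ranges (documentation-only ranges), the header name `x-edge-auth` and the function name are all assumptions made for illustration.

```python
import hmac
import ipaddress

# Hypothetical ranges published by the global edge platform (documentation ranges here).
EDGE_RANGES = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]
# Hypothetical header the edge platform adds to every request it forwards.
EDGE_HEADER = "x-edge-auth"


def is_from_edge(source_ip: str, headers: dict, expected_secret: str) -> bool:
    """Accept a request only if it comes from an edge range AND carries the shared header."""
    try:
        address = ipaddress.ip_address(source_ip)
    except ValueError:
        return False  # Not a valid IP at all: reject.

    if not any(address in network for network in EDGE_RANGES):
        return False  # Source is not on the allow list.

    # Header names are case-insensitive, so normalise before the lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    supplied = normalised.get(EDGE_HEADER, "")
    # Constant-time comparison to avoid leaking the secret through timing.
    return hmac.compare_digest(supplied.encode(), expected_secret.encode())
```

Both checks have to pass: the allow list stops traffic that bypasses the edge, and the header stops other customers of the same edge platform from reaching your origin through its IPs.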

Kind of a given, but in general:

- prefer mTLS over TLS

- make sure you limit the sources that can talk to your infrastructure

- it’s preferred to have several layers of WAF and DDoS protection

- rate limiting can be key depending on what you are exposing

- allow only the necessary IPs or ranges in your firewall and netpols. Firewall tags can be painful to manage across several regions, so use a hierarchical scheme that lets you repeat some tags across clusters/regions.
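One way to picture a hierarchical tag scheme is a helper that derives tags from broadest to narrowest scope. This is a sketch of one possible convention, not a prescribed format; the role, region and cluster names are hypothetical.

```python
from typing import List, Optional


def firewall_tags(role: str, region: Optional[str] = None, cluster: Optional[str] = None) -> List[str]:
    """Return tags from broadest to narrowest: role, role-region, role-region-cluster."""
    tags = [role]
    if region:
        tags.append(f"{role}-{region}")
        if cluster:
            tags.append(f"{role}-{region}-{cluster}")
    return tags


# The same broad tag ("allow-edge") repeats across every region and cluster,
# while the narrower tags let a rule target one region or one cluster.
tags_a = firewall_tags("allow-edge", "europe-west1", "cluster-a")
tags_b = firewall_tags("allow-edge", "us-east1", "cluster-b")
```

A firewall rule written against `allow-edge` then applies everywhere, and an exception for a single cluster only needs the most specific tag.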

Putting Things Together

In addition to what we have secured so far, we can also use:

- Security Command Center Enterprise

- Organization Policies

- VPC-SC (Virtual Private Cloud Service Controls) - I love this one. It’s kind of a “firewall for API calls”.